DGX Systems

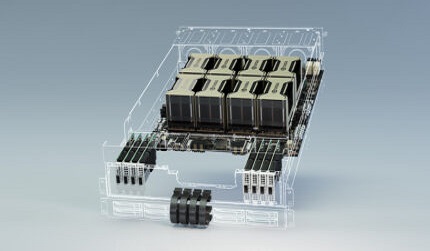

NVIDIA DGX systems are a family of high-performance computing systems produced by NVIDIA, a technology company specializing in graphics processing units (GPUs) and artificial intelligence (AI) computing. DGX systems are designed to accelerate AI workloads and to make deep learning training and inference faster and more efficient.

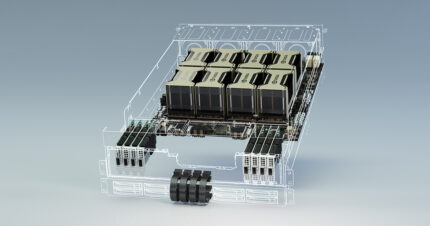

DGX systems are built around NVIDIA’s data center GPUs. The DGX A100 generation, for example, uses eight NVIDIA A100 Tensor Core GPUs, which are optimized for AI and deep learning workloads. The GPUs are linked by NVLink (through NVSwitch chips in the DGX A100), which gives much higher GPU-to-GPU bandwidth than PCIe, and the systems also include high-speed networking and NVMe storage.

DGX systems ship with NVIDIA’s software stack: DGX OS with the GPU drivers and CUDA, plus access to deep learning libraries such as cuDNN and TensorRT. Much of this stack is delivered through NVIDIA NGC (originally "NVIDIA GPU Cloud"), a catalog of GPU-optimized containers that package pre-configured deep learning frameworks and applications.
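
As an illustration of how NGC containers are typically launched, the sketch below builds (but does not run) a `docker run` command for an NGC framework image. The registry path `nvcr.io/nvidia/...` and Docker's `--gpus all` flag are real; the helper name `ngc_run_command`, the `pytorch` image, the `23.10-py3` tag, and the `/data` mount are illustrative assumptions, so check the NGC catalog for the images and tags that match your system.

```python
import shlex
from typing import List

# NGC's public registry namespace for NVIDIA-published framework images.
NGC_REGISTRY = "nvcr.io/nvidia"


def ngc_run_command(framework: str, tag: str, host_dir: str,
                    container_dir: str = "/workspace") -> List[str]:
    """Hypothetical helper: build a `docker run` argv for an NGC framework image."""
    image = f"{NGC_REGISTRY}/{framework}:{tag}"
    return [
        "docker", "run", "--rm",
        "--gpus", "all",                      # expose all GPUs (requires the NVIDIA Container Toolkit)
        "-v", f"{host_dir}:{container_dir}",  # mount host data/code into the container
        image,
    ]


# Example tag only; pick a current one from the NGC catalog.
cmd = ngc_run_command("pytorch", "23.10-py3", "/data")
print(shlex.join(cmd))
```

Returning an argument list rather than a single string lets the command be passed straight to `subprocess.run` without shell quoting issues; `shlex.join` is used only to display it.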

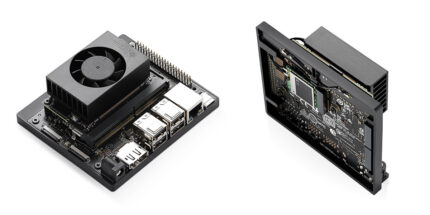

DGX systems are available in several configurations, including the DGX A100, DGX Station A100, and DGX POD. The DGX A100 is a rack-mounted system designed for data centers and large-scale AI workloads, while the DGX Station A100 is a smaller, desktop form factor system designed for individual data scientists and AI research teams. The DGX POD is a reference architecture for building large-scale AI infrastructure from multiple DGX systems.
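
When planning a DGX POD, a first-order question is how many systems a target GPU count requires. The sketch below does that arithmetic, assuming DGX A100 nodes with eight GPUs each and one 200 Gb/s HDR InfiniBand compute-fabric port per GPU; the names `size_pod` and `PodSizing` are hypothetical, and a real design would also account for storage, management networking, power, and cooling.

```python
from dataclasses import dataclass

GPUS_PER_DGX_A100 = 8            # eight A100 GPUs per DGX A100
COMPUTE_PORTS_PER_DGX_A100 = 8   # assumed: one HDR InfiniBand compute port per GPU


@dataclass(frozen=True)
class PodSizing:
    systems: int
    gpus: int
    compute_fabric_ports: int


def size_pod(required_gpus: int) -> PodSizing:
    """Hypothetical helper: smallest number of DGX A100 systems covering required_gpus."""
    if required_gpus <= 0:
        raise ValueError("required_gpus must be positive")
    systems = -(-required_gpus // GPUS_PER_DGX_A100)  # integer ceiling division
    return PodSizing(
        systems=systems,
        gpus=systems * GPUS_PER_DGX_A100,
        compute_fabric_ports=systems * COMPUTE_PORTS_PER_DGX_A100,
    )


print(size_pod(100))  # PodSizing(systems=13, gpus=104, compute_fabric_ports=104)
```

The port count matters because every compute port needs a matching switch port in the cluster fabric.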

DGX systems are used in a variety of industries, including healthcare, finance, automotive, and manufacturing, for tasks such as natural language processing, computer vision, and speech recognition. Their purpose is to shorten the time it takes to train, tune, and deploy models in AI projects.

Although a DGX system is a compute platform rather than a networking product, its networking components are important to its overall functionality and performance, especially in the data centers and other enterprise environments where it is typically deployed.

Besides its GPUs, a DGX system includes CPUs, memory, and storage, along with several network adapters that connect it to the rest of the data center. These adapters carry data between the DGX system and other devices such as storage arrays, other compute nodes, and external networks. They are much faster than typical 10 or 25 Gb/s server Ethernet: the DGX A100, for example, has eight single-port 200 Gb/s HDR InfiniBand adapters for the cluster compute fabric, plus dual-port ConnectX-6 adapters that run at up to 200 Gb/s for storage and in-band management traffic.
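
To make link speeds concrete, the sketch below estimates how long it takes to move a dataset over a single link. Links are rated in bits per second while data sizes are usually quoted in bytes, so the calculation multiplies by 8. The 1 TB dataset and the `efficiency` factor (a crude scaling for protocol overhead) are illustrative assumptions; real throughput also depends on storage, protocol, and congestion.

```python
def transfer_seconds(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Idealized time to send size_bytes over one link of link_gbps gigabits per second.

    efficiency scales the usable bandwidth (1.0 = theoretical line rate).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1.0:
        raise ValueError("link_gbps must be positive and 0 < efficiency <= 1")
    bits = size_bytes * 8  # bytes -> bits
    return bits / (link_gbps * 1e9 * efficiency)


ONE_TB = 1e12  # decimal terabyte, in bytes
for gbps in (10, 25, 200):
    print(f"{gbps:>3} Gb/s: {transfer_seconds(ONE_TB, gbps):4.0f} s")
```

At line rate, 1 TB takes about 800 s at 10 Gb/s, 320 s at 25 Gb/s, and 40 s at 200 Gb/s, which is why GPU clusters favor the faster links.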

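The networking components in a DGX system are designed for high-bandwidth, low-latency connectivity, which is critical for AI workloads that move large amounts of data between systems, for example when gradients are exchanged between nodes during distributed training. The network adapters support Remote Direct Memory Access (RDMA) over InfiniBand or RDMA over Converged Ethernet (RoCE), including GPUDirect RDMA, which lets data move between GPU memory and the network without staging it through host memory. Communication libraries such as the Message Passing Interface (MPI) and NVIDIA’s NCCL build on these capabilities to perform high-performance data transfers between nodes in a cluster.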
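
A central collective in multi-node training is all-reduce, which leaves every node with the element-wise sum of all nodes' buffers. One pattern that NCCL and many MPI implementations can use is the ring all-reduce, sketched below as a pure-Python simulation with no real networking: a reduce-scatter phase followed by an all-gather phase, each taking n - 1 steps in which every rank sends one chunk to its ring neighbor. The function name `ring_allreduce` is hypothetical; real libraries choose among several algorithms (ring, tree, and others) depending on topology and message size.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over len(buffers) ranks arranged in a ring."""
    if not buffers:
        raise ValueError("need at least one rank")
    n, length = len(buffers), len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all ranks must hold vectors of the same length")
    data = [list(b) for b in buffers]                # copy so inputs stay untouched
    bounds = [length * i // n for i in range(n + 1)]  # chunk c = bounds[c]:bounds[c+1]

    def chunk(rank: int, c: int) -> List[float]:
        return data[rank][bounds[c]:bounds[c + 1]]    # slice copy = snapshot of what is sent

    # Phase 1, reduce-scatter: in step s, rank r sends chunk (r - s) to rank r + 1,
    # which adds it into its own copy. Afterwards rank r owns the full sum of chunk (r + 1).
    for s in range(n - 1):
        sends = [(r, (r - s) % n, chunk(r, (r - s) % n)) for r in range(n)]
        for r, c, payload in sends:
            dst = (r + 1) % n
            for i, v in zip(range(bounds[c], bounds[c + 1]), payload):
                data[dst][i] += v

    # Phase 2, all-gather: in step s, rank r forwards its finished chunk (r + 1 - s)
    # to rank r + 1, which overwrites its copy.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n, chunk(r, (r + 1 - s) % n)) for r in range(n)]
        for r, c, payload in sends:
            data[(r + 1) % n][bounds[c]:bounds[c + 1]] = payload
    return data


ranks = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0], [100.0, 200.0, 300.0, 400.0]]
print(ring_allreduce(ranks)[0])  # [111.0, 222.0, 333.0, 444.0]
```

Each rank sends and receives roughly 2(n - 1)/n times its buffer size regardless of ring length, so per-link bandwidth, which RDMA-capable adapters help maximize, largely determines how long the operation takes.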

Beyond the adapters in the DGX system itself, overall performance also depends on the data center network around it. To get full performance from DGX systems, that network must provide high-bandwidth, low-latency paths between the DGX systems, storage, and the other devices they exchange data with. This may involve high-speed InfiniBand or Ethernet switches, fiber-optic cabling, and software-defined networking (SDN) solutions.
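
One common check when designing such a network is a leaf switch's oversubscription ratio: the total bandwidth of its server-facing ports divided by the total bandwidth of its uplinks. A ratio of 1 means the switch is non-blocking, which is a usual goal for GPU compute fabrics; higher ratios can slow collective operations when all nodes communicate at once. The port counts below are hypothetical examples, not a specific NVIDIA reference design.

```python
from fractions import Fraction


def oversubscription_ratio(down_ports: int, down_gbps: int,
                           up_ports: int, up_gbps: int) -> Fraction:
    """Server-facing bandwidth / uplink bandwidth for one leaf switch (1 = non-blocking)."""
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("a leaf switch needs at least one uplink")
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


# Hypothetical leaf switches:
print(oversubscription_ratio(20, 200, 20, 200))  # 1  (non-blocking)
print(oversubscription_ratio(32, 25, 4, 100))    # 2  (2:1 oversubscribed)
```

Using `Fraction` keeps ratios such as 3:2 exact instead of rounding them to a float.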

In summary, a DGX system is not a networking product, but it relies on its networking components for high-speed, low-latency connectivity to other devices in the data center. Those components are designed for AI workloads, and the system’s overall performance also depends on the quality and design of the surrounding network infrastructure.
